Lecture 24 - Classifying IMDB Reviews with a Recurrent Neural Network

2021. 7. 8. 01:27 · ICT Mentoring / Self-Study Machine Learning + Deep Learning (혼자 공부하는 머신러닝+딥러닝)


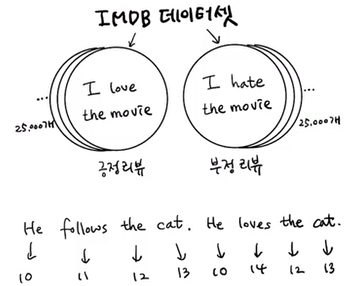

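IMDB review dataset

IMDB is a movie rating and review site.

The dataset is built from review comments collected on that site.

It is a standard benchmark for sentiment analysis.

It contains 50,000 reviews, split evenly into 25,000 positive reviews (positive samples, label 1) and 25,000 negative reviews (negative samples, label 0). Keras divides them into 25,000 training reviews and 25,000 test reviews.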

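- NLP (natural language processing): the field of processing human language with computers

- Corpus: the dataset used in NLP

- Token: a unit of text, typically a word separated by spaces

- Vocabulary: the set of unique tokens in the training set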
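To make these terms concrete, here is a minimal sketch in plain Python on a hypothetical three-review corpus. It assumes the simplest possible tokenizer (lowercase, split on whitespace), which is not necessarily how the IMDB data was preprocessed.

```python
from collections import Counter

# A hypothetical toy corpus (not taken from IMDB)
corpus = [
    "this movie was great",
    "this movie was terrible",
    "great acting and a great story",
]

# Tokens: here simply lowercase words split on whitespace
tokens_per_review = [review.lower().split() for review in corpus]

# Vocabulary: the unique tokens, ranked by frequency (most frequent first)
counts = Counter(tok for toks in tokens_per_review for tok in toks)
vocabulary = [word for word, _ in counts.most_common()]

print(tokens_per_review[0])  # ['this', 'movie', 'was', 'great']
print(len(vocabulary))       # 9
print(vocabulary[0])         # great
```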

Loading the IMDB data with Keras

```python
from tensorflow.keras.datasets import imdb

# Keep only the 500 most frequent words; rarer words are replaced by index 2
(train_input, train_target), (test_input, test_target) = imdb.load_data(
    num_words=500)

print(train_input.shape, test_input.shape)
#//(25000,) (25000,)

print(train_input[0])
#//[1, 14, 22, 16, 43, 2, 2, 2, 2, 65, 458, 2, 66, 2, 4, 173, 36, 256, 5, 25, 100, 43, 2, 112, 50, 2, 2, 9, 35, 480, 284, 5, 150, 4, 172, 112, 167, 2, 336, 385, 39, 4, 172, 2, 2, 17, 2, 38, 13, 447, 4, 192, 50, 16, 6, 147, 2, 19, 14, 22, 4, 2, 2, 469, 4, 22, 71, 87, 12, 16, 43, 2, 38, 76, 15, 13, 2, 4, 22, 17, 2, 17, 12, 16, 2, 18, 2, 5, 62, 386, 12, 8, 316, 8, 106, 5, 4, 2, 2, 16, 480, 66, 2, 33, 4, 130, 12, 16, 38, 2, 5, 25, 124, 51, 36, 135, 48, 25, 2, 33, 6, 22, 12, 215, 28, 77, 52, 5, 14, 407, 16, 82, 2, 8, 4, 107, 117, 2, 15, 256, 4, 2, 7, 2, 5, 2, 36, 71, 43, 2, 476, 26, 400, 317, 46, 7, 4, 2, 2, 13, 104, 88, 4, 381, 15, 297, 98, 32, 2, 56, 26, 141, 6, 194, 2, 18, 4, 226, 22, 21, 134, 476, 26, 480, 5, 144, 30, 2, 18, 51, 36, 28, 224, 92, 25, 104, 4, 226, 65, 16, 38, 2, 88, 12, 16, 283, 5, 16, 2, 113, 103, 32, 15, 16, 2, 19, 178, 32]
```
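The integers above are word indices, not words. By Keras' documented defaults, `imdb.load_data` reserves 0 for padding, 1 for the start-of-review marker (`start_char=1`) and 2 for out-of-vocabulary words (`oov_char=2`), and shifts real word ranks up by 3 (`index_from=3`). With `num_words=500`, every index of 500 or more becomes 2, which is why so many 2s appear. The sketch below mimics that scheme on a toy corpus; `build_word_index` and `encode` are hypothetical helpers, not Keras functions.

```python
from collections import Counter

# Reserved indices, mirroring the Keras imdb.load_data defaults
PAD, START, OOV = 0, 1, 2
INDEX_FROM = 3  # real words start after the reserved indices

def build_word_index(corpus):
    """Rank words by frequency: the most frequent word gets rank 1."""
    counts = Counter(w for text in corpus for w in text.lower().split())
    return {word: rank for rank, (word, _) in enumerate(counts.most_common(), start=1)}

def encode(text, word_index, num_words):
    """START marker, then rank + INDEX_FROM per word; indices >= num_words become OOV."""
    seq = [START]
    for w in text.lower().split():
        rank = word_index.get(w)
        idx = rank + INDEX_FROM if rank is not None else OOV
        seq.append(idx if idx < num_words else OOV)
    return seq

corpus = ["the film was good", "the film was bad", "the plot was good"]
word_index = build_word_index(corpus)
print(encode("the plot was bad", word_index, num_words=8))  # [1, 4, 2, 5, 2]
```

Lowering `num_words` shrinks the vocabulary (and later the one-hot vectors) at the cost of mapping more words to the same OOV index.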

```python
# Labels: 1 = positive review, 0 = negative review
print(train_target[:20])
#//[1 0 0 1 0 0 1 0 1 0 1 0 0 0 0 0 1 1 0 1]
```

Preparing the training set

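```python
from sklearn.model_selection import train_test_split

# Hold out 20% of the training reviews as a validation set
train_input, val_input, train_target, val_target = train_test_split(
    train_input, train_target, test_size=0.2, random_state=42)
```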
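With `test_size=0.2`, 5,000 of the 25,000 training reviews are held out for validation, leaving 20,000 for training. The sketch below shows the idea in plain Python. It is a simplified stand-in (a seeded shuffle followed by slicing), not scikit-learn's actual implementation, so it will not reproduce the same split as `random_state=42`; `holdout_split` is a hypothetical name.

```python
import math
import random

def holdout_split(inputs, targets, test_size=0.2, seed=42):
    """Shuffle indices, then hold out ceil(test_size * n) samples for validation."""
    n = len(inputs)
    n_val = math.ceil(test_size * n)
    order = list(range(n))
    random.Random(seed).shuffle(order)
    val_idx, train_idx = order[:n_val], order[n_val:]

    def pick(seq, idx):
        return [seq[i] for i in idx]

    return (pick(inputs, train_idx), pick(inputs, val_idx),
            pick(targets, train_idx), pick(targets, val_idx))

inputs = list(range(25000))                # stand-ins for 25,000 reviews
targets = [i % 2 for i in range(25000)]    # stand-in labels
tr_x, va_x, tr_y, va_y = holdout_split(inputs, targets)
print(len(tr_x), len(va_x))  # 20000 5000
```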

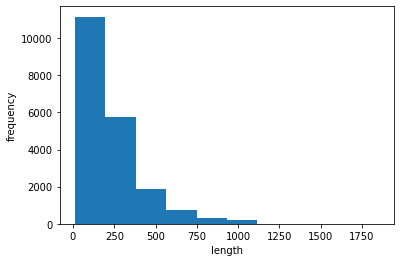

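```python
import numpy as np
import matplotlib.pyplot as plt

# Number of tokens in each training review
lengths = np.array([len(x) for x in train_input])

print(np.mean(lengths), np.median(lengths))
#//239.00925 178.0

plt.hist(lengths)
plt.xlabel('length')
plt.ylabel('frequency')
plt.show()
```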
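The mean (about 239 tokens) sits well above the median (178), which points to a right-skewed distribution: most reviews are short and a few very long ones pull the mean up. The lengths below are hypothetical, chosen only to show the effect with Python's `statistics` module.

```python
import statistics

# Hypothetical review lengths in tokens: mostly short, two very long outliers
lengths = [50, 80, 120, 150, 178, 200, 230, 900, 1200]

mean = statistics.mean(lengths)
median = statistics.median(lengths)
print(round(mean, 2), median)  # 345.33 178

# Share of these reviews that would fit in 100 tokens without truncation
print(sum(n <= 100 for n in lengths) / len(lengths))
```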

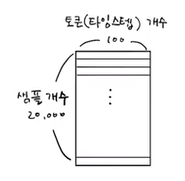

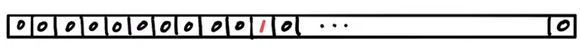

Sequence padding

```python
from tensorflow.keras.preprocessing.sequence import pad_sequences

# Cut or pad every review to exactly 100 tokens
train_seq = pad_sequences(train_input, maxlen=100)
# The validation set needs the same treatment (val_seq is used for val_oh later)
val_seq = pad_sequences(val_input, maxlen=100)

print(train_seq.shape)
#//(20000, 100)

print(train_seq[0])
#//[ 10 4 20 9 2 364 352 5 45 6 2 2 33 269 8 2 142 2
#// 5 2 17 73 17 204 5 2 19 55 2 2 92 66 104 14 20 93
#// 76 2 151 33 4 58 12 188 2 151 12 215 69 224 142 73 237 6
#// 2 7 2 2 188 2 103 14 31 10 10 451 7 2 5 2 80 91
#// 2 30 2 34 14 20 151 50 26 131 49 2 84 46 50 37 80 79
#// 6 2 46 7 14 20 10 10 470 158]

# The padded sequence ends with the same tokens as the original review,
# so long reviews are truncated from the front
print(train_input[0][-10:])
#//[6, 2, 46, 7, 14, 20, 10, 10, 470, 158]

# Short reviews are padded with zeros at the front
print(train_seq[5])
#//[ 0 0 0 0 1 2 195 19 49 2 2 190 4 2 352 2 183 10
#// 10 13 82 79 4 2 36 71 269 8 2 25 19 49 7 4 2 2
#// 2 2 2 10 10 48 25 40 2 11 2 2 40 2 2 5 4 2
#// 2 95 14 238 56 129 2 10 10 21 2 94 364 352 2 2 11 190
#// 24 484 2 7 94 205 405 10 10 87 2 34 49 2 7 2 2 2
#// 2 2 290 2 46 48 64 18 4 2]
```
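The outputs show that `pad_sequences` works from the front by default: `train_seq[0]` keeps the last 100 tokens of a longer review, and `train_seq[5]` gets zeros at its start. This matches the documented defaults `padding='pre'` and `truncating='pre'`. A plain-Python sketch of that behavior, assuming `maxlen >= 1` (`pad_pre` is a hypothetical name):

```python
def pad_pre(sequences, maxlen, value=0):
    """Mimic pad_sequences(..., padding='pre', truncating='pre') for maxlen >= 1."""
    padded = []
    for seq in sequences:
        kept = list(seq)[-maxlen:]  # truncating='pre': drop tokens from the front
        padded.append([value] * (maxlen - len(kept)) + kept)  # padding='pre': zeros in front
    return padded

print(pad_pre([[1, 2, 3, 4, 5], [7, 8]], maxlen=3))  # [[3, 4, 5], [0, 7, 8]]
```

Keeping the end of a review is a design choice; `truncating='post'` would keep the beginning instead.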

Building the recurrent neural network model
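Conceptually, a `SimpleRNN` layer with 8 units carries an 8-dimensional hidden state across the 100 timesteps, updating it as h_t = tanh(x_t W_x + h_(t-1) W_h + b), where tanh is Keras' default activation. With the default `return_sequences=False`, only the final state is passed on to the `Dense` layer. The sketch below uses toy sizes and random weights as assumptions; it illustrates the recurrence and is not Keras' implementation.

```python
import math
import random

def simple_rnn_forward(xs, W_x, W_h, b):
    """Run the recurrence over xs (a list of input vectors) and return only
    the final hidden state, like return_sequences=False."""
    units = len(b)
    h = [0.0] * units  # initial state: zeros
    for x in xs:
        # The comprehension reads the previous h; h is rebound only afterwards
        h = [
            math.tanh(
                sum(x[i] * W_x[i][j] for i in range(len(x)))    # input term
                + sum(h[k] * W_h[k][j] for k in range(units))   # recurrent term
                + b[j]
            )
            for j in range(units)
        ]
    return h

rng = random.Random(0)
input_dim, units = 5, 3  # toy sizes; the post uses 500 and 8
W_x = [[rng.uniform(-0.5, 0.5) for _ in range(units)] for _ in range(input_dim)]
W_h = [[rng.uniform(-0.5, 0.5) for _ in range(units)] for _ in range(units)]
b = [0.0] * units

# A 4-step toy sequence of one-hot vectors (token indices 1, 4, 2, 0)
xs = [[1.0 if k == t else 0.0 for k in range(input_dim)] for t in [1, 4, 2, 0]]
h_final = simple_rnn_forward(xs, W_x, W_h, b)

# Dense(1, activation='sigmoid') on the final state (bias taken as zero here)
w_out = [rng.uniform(-0.5, 0.5) for _ in range(units)]
logit = sum(wi * hi for wi, hi in zip(w_out, h_final))
prob = 1 / (1 + math.exp(-logit))
print(h_final, prob)
```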

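```python
from tensorflow import keras

model = keras.Sequential()
# 8 recurrent units; each sample is 100 timesteps of 500-dim one-hot vectors
model.add(keras.layers.SimpleRNN(8, input_shape=(100, 500)))
# A single sigmoid unit for binary (positive/negative) classification
model.add(keras.layers.Dense(1, activation='sigmoid'))
```

One-hot encoding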
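One-hot encoding turns each token index into a vector as long as the vocabulary, with a single 1 at that index. The plain-Python sketch below (`to_one_hot` is a hypothetical helper) also shows why this representation is expensive: the training array has 20,000 × 100 × 500 entries. The 4-bytes-per-entry figure assumes float32 storage.

```python
def to_one_hot(seq, num_classes):
    """Each integer in seq becomes a list with a single 1.0 at that index."""
    return [[1.0 if k == token else 0.0 for k in range(num_classes)] for token in seq]

oh = to_one_hot([10, 4, 0], num_classes=12)
print(oh[0])                     # 1.0 at index 10, like train_oh[0][0][:12]
print([sum(row) for row in oh])  # [1.0, 1.0, 1.0]

# Size of train_oh: samples x timesteps x vocabulary size
entries = 20000 * 100 * 500
print(entries)                   # 1000000000
print(entries * 4 / 1e9, "GB")   # 4.0 GB if stored as 4-byte floats
```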

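```python
# Convert every token index into a 500-dim one-hot vector
train_oh = keras.utils.to_categorical(train_seq)

print(train_oh.shape)
#//(20000, 100, 500)

print(train_oh[0][0][:12])
#//[0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

# Exactly one element of each vector is 1
print(np.sum(train_oh[0][0]))
#//1.0

val_oh = keras.utils.to_categorical(val_seq)
```

Checking the model structure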
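The parameter counts that `model.summary()` reports for this model can be checked by hand. A `SimpleRNN` layer has an input weight matrix (input_dim × units), a recurrent weight matrix (units × units) and a bias vector (units); a `Dense` layer has input_dim × units weights plus units biases. The helper names are hypothetical.

```python
def simple_rnn_params(input_dim, units):
    # input weights + recurrent weights + biases
    return input_dim * units + units * units + units

def dense_params(input_dim, units):
    # weights + biases
    return input_dim * units + units

rnn = simple_rnn_params(500, 8)  # 500-dim one-hot input, 8 units
dense = dense_params(8, 1)       # 8 hidden values -> 1 output
print(rnn, dense, rnn + dense)   # 4072 9 4081
```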

model.summary()

#// Model: "sequential"

#// _________________________________________________________________

#// Layer (type) Output Shape Param #

#// =================================================================

#// simple_rnn (SimpleRNN) (None, 8) 4072

#// _________________________________________________________________

#// dense (Dense) (None, 1) 9

#// =================================================================

#// Total params: 4,081

#// Trainable params: 4,081

#// Non-trainable params: 0

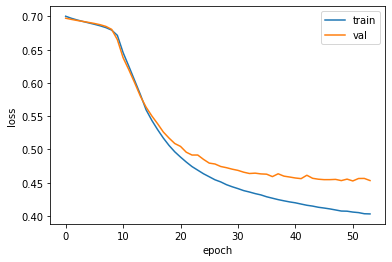

#// _________________________________________________________________모델 훈련
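The model is compiled with RMSprop at `learning_rate=1e-4`. RMSprop divides each step by a running root mean square of recent gradients, so the effective step size adapts per weight. The sketch below is the textbook update for one scalar weight; `rho=0.9` matches Keras' default, but exactly where Keras adds epsilon is an implementation detail this sketch does not try to reproduce.

```python
import math

def rmsprop_step(w, grad, v, lr=1e-4, rho=0.9, eps=1e-7):
    """One textbook RMSprop update for a single scalar weight."""
    v = rho * v + (1 - rho) * grad ** 2       # running mean of squared gradients
    w = w - lr * grad / (math.sqrt(v) + eps)  # step scaled by the gradient's RMS
    return w, v

# Same gradient three times: the step shrinks as v grows toward grad**2
w, v = 1.0, 0.0
steps = []
for grad in [2.0, 2.0, 2.0]:
    new_w, v = rmsprop_step(w, grad, v, lr=0.1)
    steps.append(w - new_w)
    w = new_w
print([round(s, 4) for s in steps])
```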

```python
# RMSprop with a smaller learning rate than the Keras default (0.001)
rmsprop = keras.optimizers.RMSprop(learning_rate=1e-4)
model.compile(optimizer=rmsprop, loss='binary_crossentropy',
              metrics=['accuracy'])

# Save the model whenever the validation loss improves
checkpoint_cb = keras.callbacks.ModelCheckpoint('best-simplernn-model.h5',
                                                save_best_only=True)
# Stop after 3 epochs without improvement and restore the best weights
early_stopping_cb = keras.callbacks.EarlyStopping(patience=3,
                                                  restore_best_weights=True)

history = model.fit(train_oh, train_target, epochs=100, batch_size=64,
                    validation_data=(val_oh, val_target),
                    callbacks=[checkpoint_cb, early_stopping_cb])
```
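`epochs=100` is only an upper bound: `EarlyStopping(patience=3, restore_best_weights=True)` watches `val_loss` (its default monitor) and stops once it has not improved for 3 consecutive epochs, then rolls back to the best epoch's weights. The plain-Python sketch below simulates that rule on hypothetical validation losses, not the post's actual results; `early_stopping` is a hypothetical name.

```python
def early_stopping(val_losses, patience=3):
    """Return (epoch where training stops, best epoch whose weights are restored)."""
    best_loss, best_epoch, wait = float("inf"), None, 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:  # improvement: remember it and reset the counter
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch

# Hypothetical validation losses: the minimum is at epoch 3
print(early_stopping([0.69, 0.60, 0.55, 0.56, 0.57, 0.58, 0.50]))  # (6, 3)
```

Note that the lower loss at epoch 7 is never seen, because training already stopped at epoch 6.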

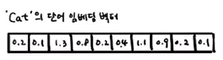

Embedding

```python
model2 = keras.Sequential()
# Each of the 500 token indices maps to a trainable 16-dim vector (no one-hot needed)
model2.add(keras.layers.Embedding(500, 16, input_length=100))
model2.add(keras.layers.SimpleRNN(8))
model2.add(keras.layers.Dense(1, activation='sigmoid'))

model2.summary()
#// Model: "sequential_1"
#// _________________________________________________________________
#//  Layer (type)                Output Shape              Param #
#// =================================================================
#//  embedding (Embedding)       (None, 100, 16)           8000
#// _________________________________________________________________
#//  simple_rnn_1 (SimpleRNN)    (None, 8)                 200
#// _________________________________________________________________
#//  dense_1 (Dense)             (None, 1)                 9
#// =================================================================
#// Total params: 8,209
#// Trainable params: 8,209
#// Non-trainable params: 0
#// _________________________________________________________________
```
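An `Embedding` layer is essentially a trainable lookup table with one row per token index, so `model2` can take the padded integer sequences directly, and each timestep becomes 16 numbers instead of a 500-dim one-hot vector. The sketch below illustrates the lookup in plain Python; the small random initial values and the example token ids are assumptions for illustration, and `embed` is a hypothetical helper.

```python
import random

vocab_size, embed_dim = 500, 16  # same sizes as Embedding(500, 16) in model2

# One 16-dim row per token index (illustrative random initial values)
rng = random.Random(0)
table = [[rng.uniform(-0.05, 0.05) for _ in range(embed_dim)]
         for _ in range(vocab_size)]

def embed(seq):
    """Replace each token index with its row of the table: a lookup, no one-hot."""
    return [table[token] for token in seq]

padded_review = [0, 0, 1, 14, 22, 16]  # hypothetical padded token ids
vectors = embed(padded_review)
print(len(vectors), len(vectors[0]))   # 6 16

print(vocab_size * embed_dim)          # 8000 (Embedding parameters)
print(embed_dim * 8 + 8 * 8 + 8)       # 200 (SimpleRNN(8) parameters with 16-dim input)
```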

References

https://www.youtube.com/watch?v=YIrpKw04ic8&list=PLVsNizTWUw7HpqmdphX9hgyWl15nobgQX&index=24
